- Posted on : February 28, 2020

- Industry : Corporate

- Service : Cloud Services

- Type: Blog

Provisioning for complex infrastructures with consistency, reliability, and security is a daunting task for many organizations. Practitioners today are looking for smarter, more efficient, and more automated approaches to setting up, tearing down, and managing infrastructure environments.

Infrastructure as Code (IaC, written here as IaaC) is the modern approach to managing complex infrastructure provisioning through a well-defined, modular, software scripting model. Most cloud platform providers, such as Google Cloud Platform (GCP) and Amazon Web Services (AWS), have modeled their approach on infrastructure expressed as code. GCP’s Deployment Manager and AWS’s CloudFormation are two such examples.

Terraform, a HashiCorp product, is a widely supported, easy-to-use and well-documented IaaC tool with a considerable client base. Terraform is an open-source tool that codifies APIs into configuration files that can be shared within teams, reused, edited, and versioned. It is a fast, efficient, and cost-effective way of provisioning your infrastructure. Using Terraform as part of your deployment process has several immediate benefits to your workflow, including speed, reliability, and experimentation.

At Infogain, we have built a Cloud Center of Excellence that helps customers adopt and migrate to cloud platforms. Our experience and work in IaaC have led to a paradigm shift: treating infrastructure as a set of well-written code that is repeatable and secure, and moving toward DevSecOps as a culture.

While providing IaaC services to many of our customers, we have developed a set of best practices for writing proper, reusable, and efficient Terraform code. Shown below are some of the best practices we recommend for building a smart Terraform script. We have included code snippets for a clear understanding.

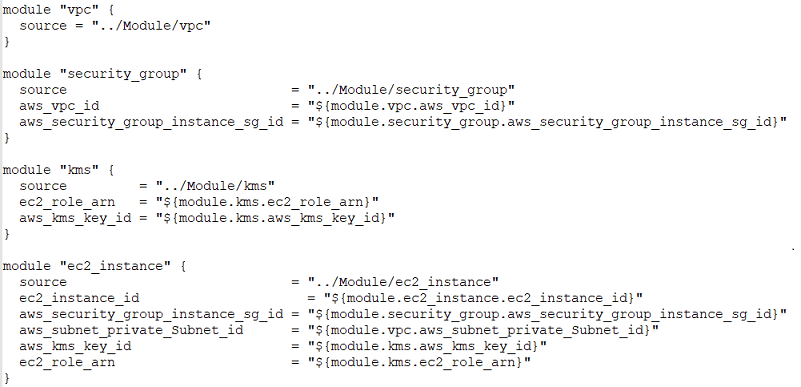

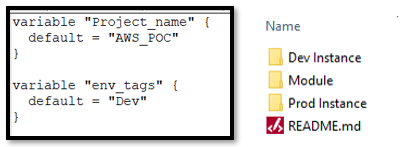

1) Modular and multi-environment setup: Writing Terraform scripts in a modular structure is useful when multiple environments, such as development, pre-production, and production, require different variable values. Code is stored in reusable modules, and the variables are stored separately to build the infrastructure for each environment. Each Terraform module defined this way is a referenceable, reusable unit that can be configured for multiple environments. The image shows how we can design the resources in different modules.

[Modular Code Setup]

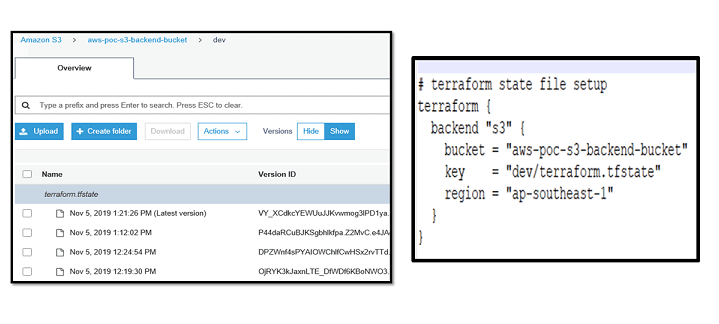
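As a minimal sketch of the modular layout described in this practice, assuming a hypothetical “network” module and an AWS provider (the directory names, variable names, and CIDR ranges are illustrative, not taken from the original screenshots):

```hcl
# modules/network/variables.tf (hypothetical module inputs)
variable "environment" {
  type        = string
  description = "Environment name, e.g. dev or prod"
}

variable "cidr_block" {
  type        = string
  description = "CIDR range for the VPC"
}

# modules/network/main.tf (the reusable resource definition)
resource "aws_vpc" "this" {
  cidr_block = var.cidr_block

  tags = {
    Name        = "vpc-${var.environment}"
    Environment = var.environment
  }
}

# environments/dev/main.tf (the dev environment calls the shared module)
module "network" {
  source      = "../../modules/network"
  environment = "dev"
  cidr_block  = "10.0.0.0/16"
}

# environments/prod/main.tf (production reuses the module with its own values)
module "network" {
  source      = "../../modules/network"
  environment = "prod"
  cidr_block  = "10.1.0.0/16"
}
```

Each environment directory holds only the values that differ, while the resource logic lives once in the module.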

2) State Persistence: State should persist between plan/apply cycles because it represents the known configuration of the infrastructure as of the last Terraform run. State files can contain sensitive information such as keys and passwords, so we recommend storing them in a remote backend such as a GCS or AWS S3 bucket. In production environments, the state should be accessible only to the CI/CD systems, with no manual intervention. Also enable versioning on the S3 bucket that holds the Terraform state files. If you manage the state bucket yourself, create it and replicate it to other regions automatically. Terraform does not support interpolated variables in the backend configuration, so you will need a separate script to set the S3 backend bucket name for each environment. The main S3 backend settings are:

- Bucket - S3 bucket names must be globally unique.

- Key - Use meaningful names for different services and applications, e.g., vpc.tfstate, application_name.tfstate, etc.

- DynamoDB table - optional; needed only when state locking is enabled.

[image 1: Backend state storage in S3 Bucket] [image 2: Sample S3 configuration]

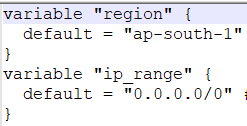
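The bucket, key, and DynamoDB table settings listed above might be combined as in the sketch below. The bucket name, region, and table name are hypothetical placeholders; “dynamodb_table” is the S3 backend's locking argument as of this post's timeframe, so check the backend documentation for the Terraform version you run.

```hcl
terraform {
  backend "s3" {
    # Hypothetical values: literal strings only, since variables
    # cannot be interpolated in a backend block.
    bucket  = "example-org-terraform-state" # must be globally unique
    key     = "vpc.tfstate"                 # one meaningful key per service
    region  = "us-east-1"
    encrypt = true                          # encrypt the state at rest

    # Optional: only needed when state locking is enabled.
    dynamodb_table = "terraform-state-lock"
  }
}
```

One alternative to a separate wrapper script is partial backend configuration: leave “bucket” out of the block and supply it per environment with “terraform init -backend-config=...”.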

3) Run Terraform commands with a variable file (“-var-file”): This avoids passing a long list of key-value pairs on the command line. A var-file makes it easy to manage the variables for each environment (DEV/UAT/PROD). (In the sample code here, the file is variables.tf.)

[Sample variable file]
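A rough illustration of a per-environment variable file follows. The file name “dev.tfvars” and the variable names are assumptions made for this sketch; the declarations and the values would normally live in separate files, as the comments indicate.

```hcl
# variables.tf (declares the inputs the configuration accepts)
variable "environment" {
  type = string
}

variable "instance_type" {
  type = string
}

variable "instance_count" {
  type = number
}

# dev.tfvars (hypothetical file with values for the DEV environment only)
environment    = "dev"
instance_type  = "t3.micro"
instance_count = 1

# Usage from the shell, one var-file per environment:
#   terraform plan  -var-file="dev.tfvars"
#   terraform apply -var-file="dev.tfvars"
```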

4) Use an up-to-date Terraform version: Use a current release of Terraform to avoid incompatibility issues, as the tool is constantly being improved and updated. Run “terraform version” to check the installed version; Terraform displays a warning if you are not on the latest release, as below:

[Terraform versioning]

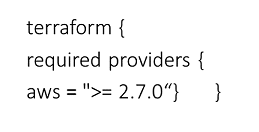

To avoid version incompatibility, pin the Terraform version in the code. Use the “required_version” setting to specify which versions of the Terraform CLI can be used with your configuration. Terraform will print an error and exit without taking any further action if the running version does not match the specified constraints. For example:

[Terraform required version setup]

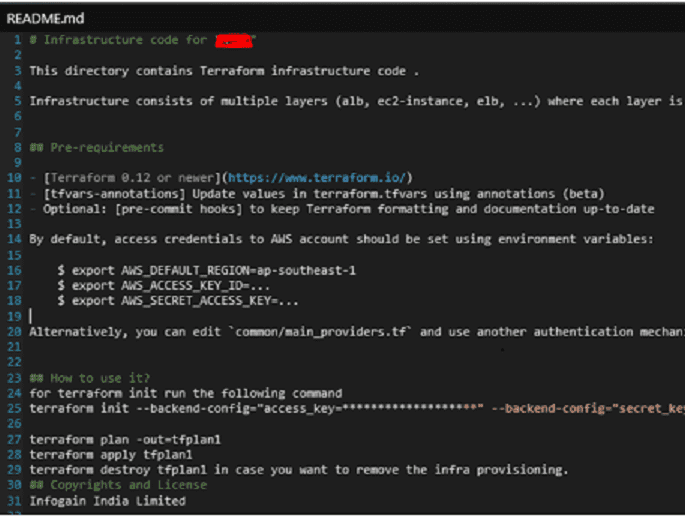
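In text form, a “required_version” constraint could look like the following. The version range is purely illustrative; choose the range your own configuration has been tested against.

```hcl
terraform {
  # Illustrative constraint: accept any 1.x release from 1.5.0 onward,
  # and refuse to run under 2.0 or later.
  required_version = ">= 1.5.0, < 2.0.0"
}
```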

5) Generate a README for each module with input and output variables: There is no need to maintain usage notes for input variables and outputs by hand. Capture this information in README.md files so that the execution team knows which inputs they need to provide and which outputs they will receive.

[Sample Readme File]
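A README is easiest to produce when every input and output carries a “description”. The sketch below assumes a hypothetical logging-bucket module; the names are illustrative only.

```hcl
# Input: the description is what a reader of the README needs to see.
variable "bucket_name" {
  type        = string
  description = "Name of the S3 bucket that stores application logs"
}

resource "aws_s3_bucket" "logs" {
  bucket = var.bucket_name
}

# Output: documents what the caller receives from the module.
output "bucket_arn" {
  description = "ARN of the log bucket, for use in IAM policies"
  value       = aws_s3_bucket.logs.arn
}
```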

6) Validate and format scripts: Always run the “terraform validate” and “terraform fmt” commands to make sure Terraform scripts are valid, consistently formatted, and error-free.

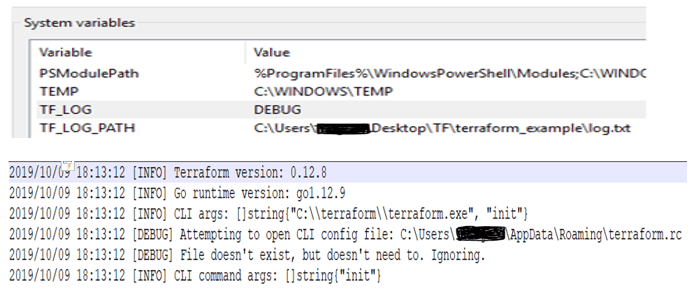

7) Turn on debug or trace logging for troubleshooting: On Windows, set the TF_LOG environment variable to DEBUG or TRACE before running Terraform. To write the log to a file, also set TF_LOG_PATH to the file location:

set TF_LOG=DEBUG or set TF_LOG=TRACE

[Terraform debugging setup and sample log file]

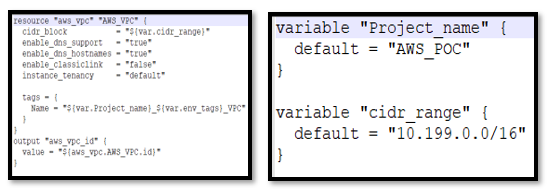

8) Avoid hard-coding values: Never hard-code values in resource definitions; keep Terraform configuration files configurable by storing the values in variables. In the sample below, the variables live in a separate variable file within each module.

[Configurable terraform files]
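The idea can be sketched as follows, assuming an AWS EC2 instance; the variable names and the default are hypothetical, and the AMI ID is deliberately left for the caller to supply.

```hcl
# variables.tf (values are declared here, not buried in resources)
variable "ami_id" {
  type        = string
  description = "AMI to launch; supplied per environment"
}

variable "instance_type" {
  type        = string
  description = "EC2 instance size"
  default     = "t3.micro"
}

# main.tf (the resource refers only to variables)
resource "aws_instance" "app" {
  ami           = var.ami_id
  instance_type = var.instance_type
}
```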

9) Environment-based setup: Use an environment-based, modular setup for the different instances: Dev, Staging, Test, and Production. Use environment tags to tell apart otherwise similar resources, and add a project-specific tag to every resource to distinguish the resources of different projects. Use one workspace per environment per Terraform configuration; for example, the environment in the screen below can be Dev, UAT, Staging, or Prod, which keeps the environments isolated.

[Environmental setup]
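One possible way to derive the environment and project tags from the workspace is sketched below. It assumes each workspace is named after its environment (dev, uat, staging, prod); the project name and bucket are hypothetical.

```hcl
variable "project" {
  type    = string
  default = "example-project" # hypothetical project name
}

locals {
  # terraform.workspace holds the name of the currently selected workspace.
  environment = terraform.workspace

  common_tags = {
    Environment = local.environment
    Project     = var.project
  }
}

resource "aws_s3_bucket" "artifacts" {
  bucket = "${var.project}-${local.environment}-artifacts"
  tags   = local.common_tags
}
```

Selecting a workspace with “terraform workspace select dev” then changes both the resource names and the tags without editing the code.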

10) Use Terraform Enterprise: Terraform Enterprise gives an organization a private instance of the Terraform Cloud application, with no resource limits and with additional enterprise-grade features such as audit logging and SAML single sign-on. Whether it fits depends on your requirements; we recommend it mainly for larger enterprises.

11) Run Terraform in a Docker container: Run Terraform in a Docker container when setting up a build job in a CI/CD pipeline. HashiCorp publishes official Terraform Docker images, which let you control exactly which version you run. Whether this is appropriate depends on your specific requirements.

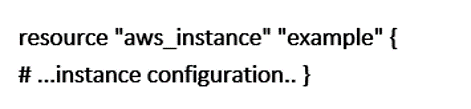

12) Use “terraform import” to include existing resources: Write a resource block for each existing resource, then use “terraform import” to bring it under Terraform management. The current AWS account ID, account alias, and region can be read directly from data sources.
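A sketch of such a configuration, assuming an existing EC2 instance: the resource name “legacy”, the argument values, and the instance ID in the comment are all hypothetical placeholders to be matched against the real instance.

```hcl
# Data sources that read account details instead of hard-coding them.
data "aws_caller_identity" "current" {}
data "aws_iam_account_alias" "current" {}
data "aws_region" "current" {}

# Resource block describing the existing instance. After the import,
# adjust these placeholder arguments until "terraform plan" shows no changes.
resource "aws_instance" "legacy" {
  ami           = "ami-00000000" # placeholder
  instance_type = "t3.micro"     # placeholder
}

output "account_id" {
  value = data.aws_caller_identity.current.account_id
}

# From the shell, attach the existing instance to the resource address:
#   terraform import aws_instance.legacy i-0123456789abcdef0
```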

Terraform import can now attach an existing instance to this resource configuration.

Here is an aggregated Terraform structure combining all the best practices:

[Aggregated Terraform code structure]

Our cloud practitioners strongly believe Terraform to be one of the most flexible tools available for defining and deploying infrastructure in a controllable and maintainable manner, provided the best practices are followed. The best practices also help to minimize downtime and deliver better business value and productivity gains.

Do you have questions, or would you like to know more about Terraform, Cloud assessment, DevSecOps, or Digital Transformation? Drop us a line at digitaltransformations@infogain.com.